How to Remove WordPress Duplicate Pages to Get Higher Google Ranking

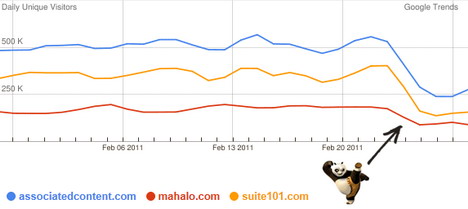

If you think that creating more tag, category, date and other kinds of archive pages helps your site rank higher in Google, you’re probably wrong! This year, Google rolled out a series of updates known as Google Panda to improve its search results. The purpose of the changes is clear: they aim to lower the rank of “low-quality sites” in search results and return high-quality sites to users. The Panda rollout has affected almost 12% of all search results, and many websites have experienced a substantial drop in traffic. So what’s the first thing you should do to improve your website or blog and avoid being hit by Panda? Here are some tips you must know!

To recover from Panda, the first thing you will have to do is remove all low-quality pages and duplicate content. Here are 3 useful solutions for your WordPress blog.

Were any of these sites affected by Google Panda update?

Before we get into the topic, run a quick Google search for “site:yourdomain.com” to see which pages from your site are currently indexed in Google search results.

1. Make Use of Robots.txt

Robots.txt is a text file that tells Google and other search engines how to crawl your site. With this easy method, search engine bots know which paths they should and shouldn’t crawl. To get a higher site and page ranking, point them at your high-quality directories and files while keeping them away from low-value or duplicate content. For example, you should disallow feeds, trackbacks, comments, tags and other low-quality pages from being crawled.

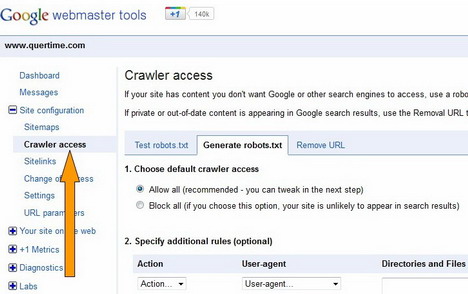

To check the status of your robots.txt file, go to Google Webmaster Tools > Site configuration > Crawler access. The tool also lets you generate your own robots.txt file in minutes. Once it’s created, just upload the file to your site’s top-level directory. That’s it!

Here’s a sample robots.txt for a WordPress blog:

    Sitemap: https://www.quertime.com/sitemap.xml

    User-agent: *
    Disallow: /cgi-bin
    Disallow: /wp-admin
    Disallow: /wp-includes
    Disallow: /wp-content/plugins
    Disallow: /wp-content/cache
    Disallow: /wp-content/themes
    Disallow: /trackback
    Disallow: /feed
    Disallow: /rss
    Disallow: /search
    Disallow: /archives
    Disallow: /comments
    Disallow: /comments/feed/
    Disallow: /date/
    Disallow: /tag/
    Disallow: /page/
    Disallow: /category/*/*
    Disallow: /?*
    Disallow: /*?*
    Disallow: /index.php
    Allow: /wp-content/uploads
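
Before uploading rules like these, it can help to test them locally. The following is a minimal sketch using Python's standard `urllib.robotparser`; the `is_allowed` helper and the sample paths are illustrative assumptions, not part of any WordPress tooling. Note that this parser does plain prefix matching and does not implement the wildcard patterns (such as `/*?*`) that Google supports, so the sketch only uses a few prefix rules from the sample above.

```python
from urllib.robotparser import RobotFileParser

# A subset of the sample rules; wildcard lines are left out because
# urllib.robotparser matches path prefixes only.
RULES = """\
User-agent: *
Disallow: /wp-admin
Disallow: /feed
Disallow: /tag/
Allow: /wp-content/uploads
"""


def is_allowed(path, rules=RULES, agent="Googlebot"):
    """Return True if `agent` may crawl `path` under `rules` (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    # yourdomain.com is a placeholder; only the path part is matched.
    return parser.can_fetch(agent, "http://yourdomain.com" + path)


if __name__ == "__main__":
    for path in ["/my-post/", "/tag/seo/", "/feed", "/wp-content/uploads/logo.png"]:
        print(path, "->", "allowed" if is_allowed(path) else "blocked")
```

Running the script prints one line per path, so you can see at a glance whether a post URL was blocked by accident.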

2. Install Robots Meta WordPress Plugin

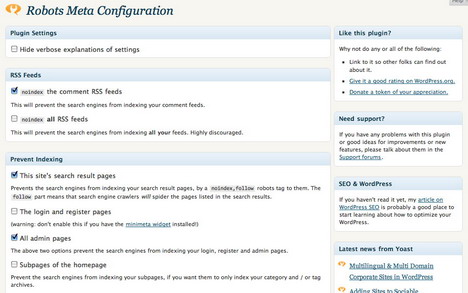

This plugin makes it easy to add the appropriate meta robots tags to your pages, disable unused archives, and nofollow unnecessary links. You can read more about Robots Meta plugin here.
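
To make the idea concrete, here is a rough Python sketch of the kind of decision such a plugin makes: which meta robots tag to output for each type of page. It is not the plugin's actual code; the page type names and the `robots_meta_tag` helper are assumptions made up for illustration.

```python
# Page types treated as low-value archives in this sketch (assumed names).
NOINDEX_PAGE_TYPES = {"tag", "date", "author", "search"}


def robots_meta_tag(page_type):
    """Build a meta robots tag for a page type (hypothetical helper)."""
    if page_type in NOINDEX_PAGE_TYPES:
        # Keep the archive out of the index but still let bots follow its links.
        content = "noindex,follow"
    else:
        content = "index,follow"
    return f'<meta name="robots" content="{content}">'


if __name__ == "__main__":
    print(robots_meta_tag("tag"))
    print(robots_meta_tag("post"))
```

The resulting tag belongs in the <head> section of the page it describes.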

3. Specify a Canonical Link on Each Duplicate Version of a Page

Pick one canonical (preferred) URL for each page and point Google to it by specifying a canonical link on every duplicate version of that page.

All you have to do is add a <link> element with the attribute rel="canonical" to the <head> section of the non-canonical pages. See the example below:

<link rel="canonical" href="http://yourdomain.com/article/abc.html">

Add this element to the <head> section of each non-canonical URL, for example:

http://yourdomain.com/article/abc.html?xxx=123

http://yourdomain.com/index.php?article=123

With this method, you tell Google that these URLs all refer to the canonical page at http://yourdomain.com/article/abc.html. More information about canonicalization.
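
If the duplicates differ from the preferred URL only by their query string, as with the ?xxx=123 URL above, the tag can be generated programmatically. Here is a minimal Python sketch, assuming the path alone identifies the page; `canonical_link_tag` is a hypothetical helper, and URLs such as index.php?article=123 would instead need a lookup from article ID to preferred URL.

```python
from html import escape
from urllib.parse import urlsplit, urlunsplit


def canonical_link_tag(url):
    """Build a canonical <link> tag by dropping the query string and fragment.

    Hypothetical helper: assumes the path alone identifies the page.
    """
    parts = urlsplit(url)
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, "", ""))
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}">'


if __name__ == "__main__":
    print(canonical_link_tag("http://yourdomain.com/article/abc.html?xxx=123"))
```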

Do let us know if you are using other effective methods to remove duplicate and low-quality content. Should you have any questions or comments, please share them in the comments section below.
