Most Successful On-Page SEO Techniques You Must Know

Thanks to more than 10 years of dishing out penalties, Google has caught almost every manipulative SEO trick in the book. SEO professionals, however, have become so wary of stirring Google’s wrath that they have forgotten just how much wiggle room on-page SEO still offers.

This isn’t the article telling you to optimize titles, pick your keywords and work on your alt tags. In the following paragraphs, we provide a list of challenges with on-page SEO and possible solutions to improve your ranking significantly, but legitimately.

To draw a parallel, consider your site as a retail store. Off-page SEO is like the PR and reputation management efforts, while on-page SEO is about what’s in the store – some things are more important than others, but ultimately all of them add up to the eventual success or failure of the business.

It’s common for site owners to ask for quotes for off-page SEO only, leaving their pages poorly optimized, which ends up being counterproductive. With one quick look through the site, a professional SEO would be able to identify elements and code that are not SEO-compatible and that damage search engine rankings.

By implementing these on-page SEO techniques, you can make the site stronger, complementing your off-page SEO for better ranking in a shorter period of time.

Having High Page/Site Loading Times

Google is not only interested in whether you have relevant information, but also in how good a user experience you can provide if your site is recommended to users. They want to give their users what they need in as little time as possible.

While you’re looking to retain users on your site for as long as possible, Google wants to provide results that can be accessed within the shortest time possible. Where you have slower page loading times, you’ll likely have a higher bounce rate, and Google takes note of this and gives you a lower rank.

As far as page loading goes, you must consider both desktop and mobile load speeds, which were already factors in search algorithms by 2011 and have only become more significant since. Analyze how your site performs now with tools such as Google’s PageSpeed Insights, where a score of 70+ is desirable, and GTmetrix, which offers more comprehensive analysis.

Optimize your images and videos so they won’t slow down your site, enable GZIP compression, and give files expiration dates in the .htaccess file using the relevant caching directives, examples of which are available in many places online.
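
To check whether compression and expiration dates are actually being served, you can inspect the HTTP response headers of a page or file. The following is a minimal sketch in Python, not a definitive audit: the function name `audit_headers` and the issue messages are made up for illustration, and it assumes you have already collected the response headers into a dictionary.

```python
from typing import Mapping


def audit_headers(headers: Mapping[str, str]) -> list[str]:
    """Return a list of speed-related issues found in HTTP response headers.

    `audit_headers` is a hypothetical helper written for this article.
    """
    # HTTP header names are case-insensitive, so normalise them first.
    lowered = {name.lower(): value for name, value in headers.items()}
    issues = []

    # GZIP compression shows up as "gzip" in the Content-Encoding header.
    encodings = [token.strip() for token in lowered.get("content-encoding", "").lower().split(",")]
    if "gzip" not in encodings:
        issues.append("no GZIP compression (expected Content-Encoding: gzip)")

    # An expiration date is signalled by Expires or by Cache-Control: max-age.
    cache_control = lowered.get("cache-control", "").lower()
    if "expires" not in lowered and "max-age" not in cache_control:
        issues.append("no expiration date (expected Expires or Cache-Control: max-age)")

    return issues


if __name__ == "__main__":
    # Example headers, invented for this demonstration.
    print(audit_headers({"Content-Type": "text/html"}))
```

An empty list means both settings were detected; anything else points to a header that is still missing after your server changes.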

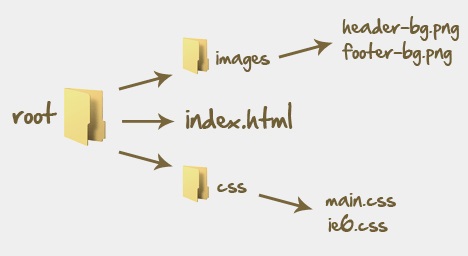

Cluttering up the Root Folder

When Google is crawling your site for just a few seconds, nothing about your on-page elements is insignificant, yet this aspect is one of the most neglected with on-page SEO. It’s a common habit for SEO guys, site designers/builders and owners/managers to throw idle files into the root folders and subfolders without any regard.

All files within your root folder are significant and throwing everything into it will dilute the importance of the relevant files in the folder. Discarding files that are no longer useful into the root folders will mean that they’ll still be considered when Google crawls your site, meaning that part of the valuable time allocated to you will be wasted on these files.

It’s time to run spring cleaning on your root folders and subfolders. You can open a new directory and christen it ‘old-files’ or another relevant name of your choice, then move your unused files there. Don’t forget to update your code with the changes, and you should also sort the media files and subdirectories to have a flowing scheme.

Once you’re done, run a broken-link checker to identify which links you need to update, rather than manually checking every link. Lastly, in the robots.txt file, include this directive under the relevant User-agent group:

- disallow: /old-files/
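
Before relying on the rule, it can help to confirm that it blocks what you expect. Here is a rough sketch using Python’s standard `urllib.robotparser` module. The robots.txt content, the `is_crawlable` helper and the example.com URLs are assumptions for illustration; the `User-agent: *` line is included because a Disallow rule only takes effect inside a User-agent group.

```python
from urllib.robotparser import RobotFileParser

# Assumed robots.txt content; "User-agent: *" applies the rule to all crawlers.
ROBOTS_TXT = """\
User-agent: *
Disallow: /old-files/
"""


def is_crawlable(robots_txt: str, url: str, agent: str = "*") -> bool:
    """Return True if `agent` may fetch `url` under the given robots.txt text.

    `is_crawlable` is a hypothetical helper, not part of any SEO tool.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


if __name__ == "__main__":
    # Placeholder URLs on example.com, used only to exercise the rule.
    for url in ("https://example.com/old-files/banner.png",
                "https://example.com/index.html"):
        print(url, "->", "allowed" if is_crawlable(ROBOTS_TXT, url) else "blocked")
```

Files under /old-files/ should be reported as blocked, while the rest of the site stays open to crawlers.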

Duplicating Content

While Google will only issue penalties for extreme cases of duplicate content, such as where it can detect fraudulent intent, duplication devalues the content itself and the site as a whole.

There may be thousands of indexed pages on offer for any search, and Google offers access to the first 1,000 results (10 results x 100 pages). Few surfers ever go past the first 10, simply because it is generally accepted that the first page has the most reliable and relevant information. Users trust Google, so Google cannot afford to compromise the users’ experience.

Duplicate content weighs a site down and makes work harder for search engine crawlers. It will take greater effort, in time as well as money, to get a duplicated page to an acceptable ranking position. There are different forms of duplication such as:

- Having different URLs for the same page – with and without ‘www’, with and without index.php, etc, all of which may be indexed in Google.

- Having regular and secure versions for pages i.e. http and https.

- Duplicate page descriptions and titles, which you can easily access on Google Webmaster Tools under Search Appearance>>HTML Improvements.

- Internal pages with parameters – many webmasters have different URLs to aid in tracking and analysis.
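
The URL-level forms of duplication listed above can be spotted by reducing each URL to one canonical form and grouping the ones that collide. The sketch below is only an illustration in Python: the list of tracking parameters, the index file names and the choice of https without ‘www’ as the canonical form are all assumptions that you would adapt to your own site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumptions for illustration: which parameters are tracking-only and which
# file names are default index documents depends on the site.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "ref"}
INDEX_FILES = ("index.php", "index.html")


def canonical_url(url: str) -> str:
    """Collapse http/https, www/non-www, index files and tracking parameters."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    path = parts.path or "/"
    for name in INDEX_FILES:
        if path.endswith("/" + name):
            path = path[: -len(name)]  # keep the trailing slash
            break
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in TRACKING_PARAMS]
    return urlunsplit(("https", host, path, urlencode(kept), ""))


def group_duplicates(urls):
    """Map each canonical URL to the variants found, keeping only real duplicates."""
    groups = defaultdict(list)
    for url in urls:
        groups[canonical_url(url)].append(url)
    return {canon: found for canon, found in groups.items() if len(found) > 1}
```

Feeding it a list of crawled or indexed URLs returns only the groups with more than one variant, which are the candidates for redirects or canonical tags.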

Use Copyscape to search for duplicate content, and find resources to help you handle it as required.

An Internal Link Structure which is not Flowing

When a search engine bot is crawling a site, it uses internal links to move from one page to the next. It collects all the necessary information until the search time runs out, or it reaches the end. Frequently linking back to certain pages and sub-pages within your site is a way of declaring that they are important.
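
To see which pages your own links currently declare as important, you can count how many other pages on the site link to each one. This is a minimal Python sketch under stated assumptions: the `LinkCollector` class and `inbound_link_counts` function are hypothetical names, the pages are supplied as a dictionary of URL to HTML that you have already fetched, and counting inbound links is only a rough proxy, not how any search engine actually scores pages.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlsplit


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def inbound_link_counts(pages: dict[str, str], site: str) -> Counter:
    """Count, for each internal URL, how many distinct pages link to it.

    `pages` maps each page URL to its HTML; `site` is the site's base URL.
    """
    host = urlsplit(site).netloc
    counts = Counter()
    for page_url, html in pages.items():
        collector = LinkCollector()
        collector.feed(html)
        targets = set()  # count each linking page once per target
        for href in collector.hrefs:
            # Resolve relative links and drop #fragments.
            target, _fragment = urldefrag(urljoin(page_url, href))
            if urlsplit(target).netloc == host and target != page_url:
                targets.add(target)
        counts.update(targets)
    return counts
```

Calling `most_common()` on the result lists pages from most to least linked, which makes it easy to spot an important landing page that few pages point to.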

If you haven’t effectively established internal links to related pages within your site, you’re wasting an opportunity right there. Improve your internal linking scheme and you’re likely to see an immediate improvement in SERP ranking. Without structure, the search algorithm will assign less suitable search queries to the site’s most important pages.

If you’ve got a blog, create links to your most important landing pages in about 20-30 posts – don’t go overboard. Create about 15-20 posts monthly addressing the main subject of your landing pages, and then link the new posts to the older ones and vice versa. This creates a network of crossroads highlighting the relationship/story between your landing pages, older posts and new posts/pages. Of course, make sure it’s high quality content.

Next, identify the strongest pages in your site and link them to your most important landing pages. The idea is to use the strength you have to help achieve your most important goals.
